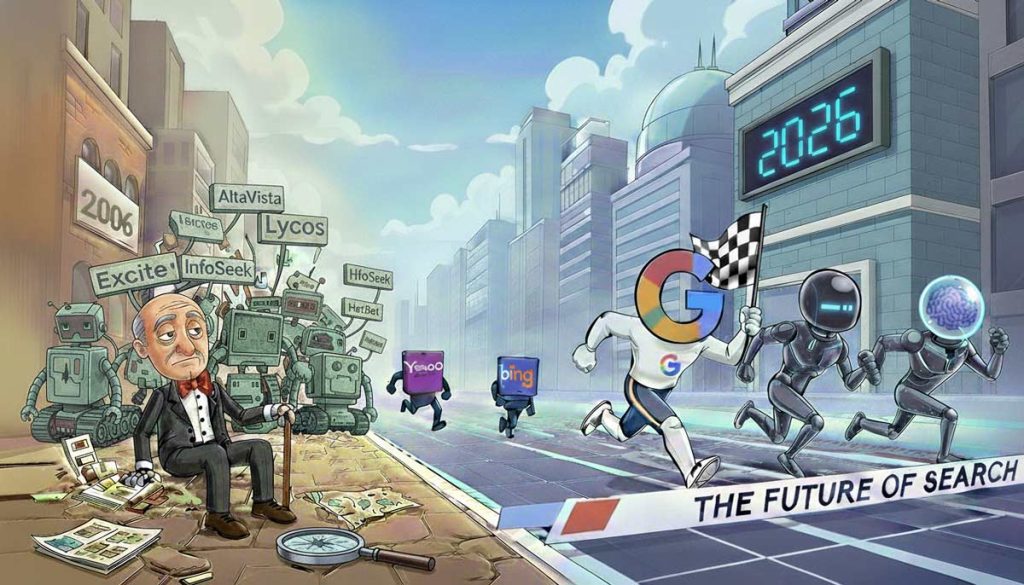

We’re Back Where We Started: Optimizing for Different Search Engines Again

There’s something oddly familiar about the current moment in search.

For anyone who’s been in SEO or digital marketing long enough, this feels like déjà vu. Years ago, optimizing for search meant understanding the quirks of each engine. Yahoo behaved differently than Ask Jeeves. Bing wanted something different than Google. You couldn’t assume a single strategy would work everywhere, and “ranking” meant adapting to multiple systems at once.

Over time, that fragmented landscape collapsed. Google became the clear winner, not because it was perfect, but because user adoption consolidated there. SEO standardized around one dominant engine, and for a long stretch, optimizing for search mostly meant optimizing for Google.

Now, thanks to AI-driven answer engines like Google AI Overviews, ChatGPT, Claude, Perplexity, and others, we’re right back where we started.

This shift is explored in detail in the paper “Generative Engine Optimization: How to Dominate AI Search” by Q. Chen, J. Chen, H. Huang, Q. Shao, and colleagues, which notes that:

“Different generative engines rely on distinct retrieval signals and source preferences, meaning that content visibility depends on how well inputs align with each system’s specific synthesis and ranking mechanisms.”

From Blue Links to Synthesized Answers

What’s changed isn’t the need to optimize—it’s what we’re optimizing for.

Instead of blue links and ranked result pages, we now have AI systems that synthesize answers. These engines don’t just retrieve information; they interpret it, summarize it, and re-present it in their own voice.

And critically, they don’t all work the same way.

Each platform pulls from different data sources, weights authority differently, and decides what to include—or exclude—based on its own internal logic. There is no single “AI ranking system.” There are several, running in parallel.

That’s what makes this moment feel so familiar.

From an SEO and content visibility perspective, this shift also brings new vocabulary into the conversation. We’re no longer talking only about SEO in the traditional sense, but about optimizing for Google AI Overviews, for ChatGPT, and for Perplexity, and about understanding how different AI answer engines surface and synthesize information. The idea of “ranking” is still present, but it’s expressed through inclusion, citation, and synthesis rather than a numbered list of results.

Different Engines, Different Biases

One of the most important shifts with AI-driven answer engines is that each system evaluates inputs differently when deciding what to show.

Traditional search engines largely worked from a shared set of signals—links, relevance, freshness, and authority—even if they weighted them differently. Generative answer engines expand that model. They blend retrieval, synthesis, and presentation into a single step, and the inputs feeding that process vary meaningfully by platform.

Why Do Different Answer Engines Favor Different Inputs?

Some engines lean heavily on their own ecosystems and first-party data, using internal signals to reinforce trust and relevance. Others prioritize earned media, external citations, and high-authority third-party validation. Some models favor structured, academic, or long-form material that supports careful synthesis, while others are optimized to summarize broad web content quickly and conversationally.

Why Do Certain Types of Inputs Perform Better on Specific AI Platforms?

The practical implication is that visibility is no longer just about what you publish, but about how that information aligns with each engine’s preferred inputs. Content that performs well in one system may underperform in another, not because it lacks quality, but because it doesn’t match the signals that particular engine relies on when assembling answers.

This is a fundamental return to platform-specific optimization, just expressed through synthesis models instead of ranking algorithms.

To make this more concrete, the differences between platforms become clearer when you look at what each system tends to rely on as its primary inputs:

| AI Platform | Primary Data Tendencies | How This Shows Up in Answers |
|---|---|---|
| Google AI Overviews | Google ecosystem (Search index, YouTube, Reddit, authoritative web sources) | Feels like an extension of traditional search, blended with summaries |
| ChatGPT | Broad web content, licensed data, general authoritative sources | More narrative, synthesized explanations |
| Claude | High-quality, factual, often academic or long-form sources | Conservative, detail-oriented summaries |
| Perplexity | Earned media, news outlets, journals, high-authority third parties | Citation-heavy, source-forward answers |

Just like the early days of search, each platform has its own tendencies.

Google AI Overviews leans on its own ecosystem and first-party signals. Perplexity favors earned media, citations, and third-party authority. Claude tends toward long-form, academic, or highly factual sources, while ChatGPT casts a much wider net across the general web.

The result is a fractured optimization landscape. Content that surfaces prominently in one AI engine may be ignored entirely by another. Being “visible” is no longer a binary state—it’s engine-specific.

This mirrors the past almost perfectly. We’re once again asking familiar questions:

- Which platforms actually matter for our audience?

- Where are people discovering information now?

- What signals does each system reward?

User Adoption Will Decide the Winners (Again)

If history is any guide, not all of these platforms will matter equally in the long run.

In the early 2000s, plenty of search engines existed. Only one became dominant. The same pattern will likely repeat here, shaped less by technical superiority and more by distribution, habit, and ease of use.

Some AI tools require users to change behavior—new interfaces, new workflows, new destinations. Others are embedded directly into experiences people already use every day. That distinction matters far more than most ranking factors ever will.

Optimization has always followed adoption, and this era will be no different.

How This Might Be Used in Practice: Follow Your Users

All of this matters less in the abstract than it does once you consider who your audience actually is and how they look for information.

How the General Public Is Likely to Use AI Answer Engines

For the general public, discovery will likely concentrate around the most familiar and frictionless experiences. Platforms like Google AI Overviews and, increasingly, ChatGPT are positioned to capture this behavior simply because they are easy to access, broadly understood, and integrated into tools people already use. For many users, AI answers will feel like a natural extension of search rather than a new destination.

How Academic or Research-Oriented Users May Behave Differently

More academic, technical, or research-driven audiences may behave differently. These users are often more comfortable validating sources, reviewing citations, and exploring deeper context. As a result, answer engines that emphasize earned media, journals, long-form analysis, or explicit sourcing may play a larger role in how information is discovered and trusted.

Why Intent Still Shapes Platform Choice

It’s also reasonable to expect continued fragmentation by intent. Quick, high-level questions may default to conversational summaries, while complex or high-stakes topics push users toward platforms that foreground sources, methodology, or primary references.

The practical takeaway isn’t to optimize everywhere equally, but to understand where your users are most likely to seek answers and which inputs those platforms tend to reward.

A Conceptual Shift, Not a Tactical Panic

This isn’t a call to abandon traditional content practices or to chase every new AI platform equally. It’s simply an observation that the one-size-fits-all era of optimization is ending again.

We’re entering a period where visibility is fragmented, authority is interpreted differently by each system, and success depends on understanding how answers are synthesized—not just where content ranks.

In other words, we’ve come full circle.

Different engines. Different rules. Same challenge.

And if the past is any indication, the platforms that feel inconvenient or unfamiliar today may not be the ones that matter tomorrow.